Download Kafka from the official Apache site. Versions newer than 2.2.0 are not recommended for this setup.

# On host 11:

cd /opt/src/

src]# wget https://archive.apache.org/dist/kafka/2.2.0/kafka_2.12-2.2.0.tgz

src]# tar xfv kafka_2.12-2.2.0.tgz -C /opt/

src]# ln -s /opt/kafka_2.12-2.2.0/ /opt/kafka

src]# cd /opt/kafka

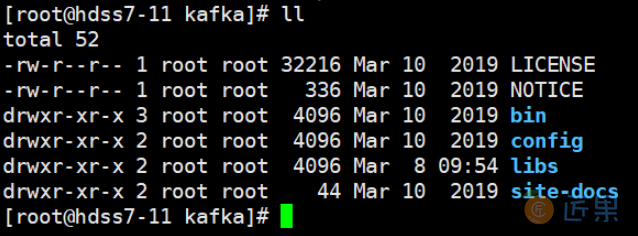

kafka]# ll

# On host 11, configure Kafka:

kafka]# mkdir -pv /data/kafka/logs

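# Change the following settings. zookeeper.connect stays at its default value, and the last two lines are new and go at the end of the file.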

kafka]# vi config/server.properties

log.dirs=/data/kafka/logs
zookeeper.connect=localhost:2181
log.flush.interval.messages=10000
log.flush.interval.ms=1000
delete.topic.enable=true
host.name=hdss7-11.host.com
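If you would rather script these edits than make them in vi, the sketch below sets each key in place. It assumes GNU sed (`sed -i -E`), and `set_prop` is a hypothetical helper name. It uncomments and overwrites keys that already exist in the stock file (for example `log.dirs` and `#log.flush.interval.messages`) and appends the rest, matching the "last two lines go at the end" note above.

```shell
# Sketch (assumes GNU sed): set key=value in a Java .properties file.
# Every existing line for the key, commented out or not, is rewritten;
# if the key is absent, the pair is appended to the end of the file.
# Values must not contain "|" or "&" (they are used/special in the sed call).
set_prop() {
  local file="$1" key="$2" value="$3" key_re
  # Escape the dots in dotted keys so they match literally in the regex.
  key_re=$(printf '%s' "$key" | sed 's/\./\\./g')
  if grep -Eq "^#?[[:space:]]*${key_re}=" "$file"; then
    # "|" is the sed delimiter, so path values like /data/kafka/logs need no escaping.
    sed -i -E "s|^#?[[:space:]]*${key_re}=.*|${key}=${value}|" "$file"
  else
    printf '%s=%s\n' "$key" "$value" >> "$file"
  fi
}

# Usage, run from /opt/kafka:
#   set_prop config/server.properties log.dirs /data/kafka/logs
#   set_prop config/server.properties log.flush.interval.messages 10000
#   set_prop config/server.properties delete.topic.enable true
#   set_prop config/server.properties host.name hdss7-11.host.com
```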

# On host 11, start Kafka:

kafka]# bin/kafka-server-start.sh -daemon config/server.properties

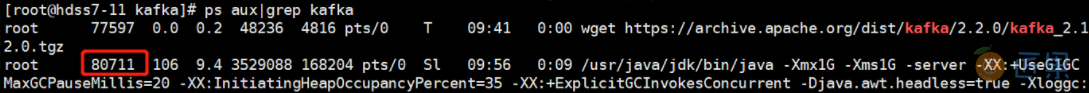

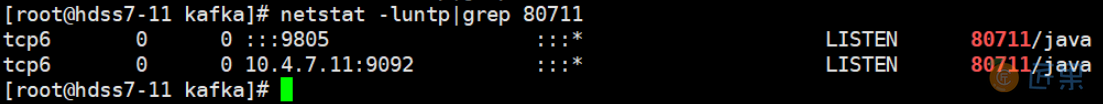

kafka]# ps aux|grep kafka

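# 80711 is the Kafka PID that ps reported on the author's machine; substitute your own. With no listeners setting, the broker listens on the default port 9092.

kafka]# netstat -luntp|grep 80711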
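As an alternative to matching the PID in netstat output, you can test the port directly. This is a minimal sketch that relies on bash's `/dev/tcp` redirection and coreutils `timeout`; `port_open` is a hypothetical helper name. Because `host.name` is set, the broker binds only to that address, so probe `hdss7-11.host.com` rather than 127.0.0.1.

```shell
# Sketch: succeed only if something accepts TCP connections on host:port.
# Uses bash's /dev/tcp pseudo-device (no nc/telnet needed); timeout(1)
# keeps the probe from hanging on filtered ports. Third arg: seconds (default 3).
port_open() {
  host="$1"; port="$2"; secs="${3:-3}"
  timeout "$secs" bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}

# Usage (9092 assumes the default listener port):
#   port_open hdss7-11.host.com 9092 && echo "kafka is up" || echo "kafka not reachable"
```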

Deploy kafka-manager

# On host 200, build the Docker image:

~]# mkdir /data/dockerfile/kafka-manager

~]# cd /data/dockerfile/kafka-manager

kafka-manager]# vi Dockerfile

FROM hseeberger/scala-sbt

ENV ZK_HOSTS=10.4.7.11:2181 \
    KM_VERSION=2.0.0.2

RUN mkdir -p /tmp && \
    cd /tmp && \
    wget https://github.com/yahoo/kafka-manager/archive/${KM_VERSION}.tar.gz && \
    tar xf ${KM_VERSION}.tar.gz && \
    cd /tmp/kafka-manager-${KM_VERSION} && \
    sbt clean dist && \
    unzip -d / ./target/universal/kafka-manager-${KM_VERSION}.zip && \
    rm -fr /tmp/${KM_VERSION}.tar.gz /tmp/kafka-manager-${KM_VERSION}

WORKDIR /kafka-manager-${KM_VERSION}

EXPOSE 9000

ENTRYPOINT ["./bin/kafka-manager","-Dconfig.file=conf/application.conf"]

# The image is large, so the build is slow (about 20 minutes) and fails fairly often.

kafka-manager]# docker build . -t harbor.od.com/infra/kafka-manager:v2.0.0.2
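Because the build fails often, a small retry wrapper can rerun it unattended. This is a sketch: `retry` is a hypothetical helper name, and the attempt count and delay in the usage line are arbitrary. Note that the download and compile happen in a single RUN step, and Docker only caches layers that completed, so each retry restarts that step from the beginning.

```shell
# Sketch: run a command until it succeeds or the attempt limit is reached.
# Args: max attempts, delay in seconds between attempts, then the command.
retry() {
  max="$1"; delay="$2"; shift 2
  n=1
  until "$@"; do
    if [ "$n" -ge "$max" ]; then
      echo "retry: '$*' failed after $n attempts" >&2
      return 1
    fi
    echo "retry: attempt $n failed, sleeping ${delay}s" >&2
    sleep "$delay"
    n=$((n + 1))
  done
}

# Usage:
#   retry 3 60 docker build . -t harbor.od.com/infra/kafka-manager:v2.0.0.2
```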

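# If the build keeps failing, use my prebuilt image instead. Your machines must then use the same addresses (10.4.7.11 and so on), because they are hardcoded in the Dockerfile.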

# kafka-manager]# docker pull 909336740/kafka-manager:v2.0.0.2

# kafka-manager]# docker tag 909336740/kafka-manager:v2.0.0.2 harbor.od.com/infra/kafka-manager:v2.0.0.2

kafka-manager]# docker images|grep kafka

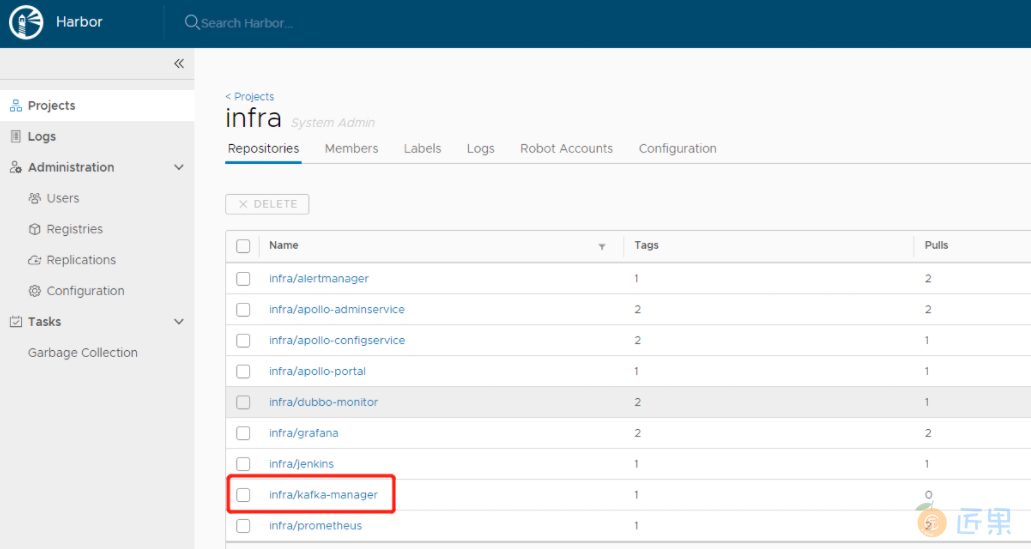

kafka-manager]# docker push harbor.od.com/infra/kafka-manager:v2.0.0.2

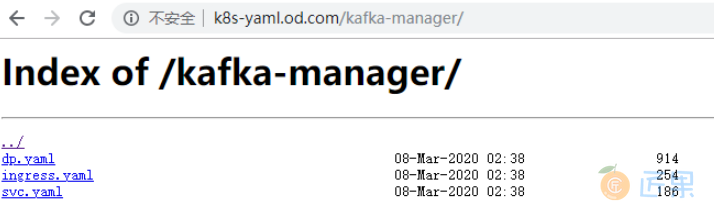

# On host 200, prepare the resource manifests:

mkdir /data/k8s-yaml/kafka-manager

cd /data/k8s-yaml/kafka-manager

kafka-manager]# vi dp.yaml

kind: Deployment
apiVersion: apps/v1
metadata:
  name: kafka-manager
  namespace: infra
  labels:
    name: kafka-manager
spec:
  replicas: 1
  selector:
    matchLabels:
      app: kafka-manager
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
      maxSurge: 1
  revisionHistoryLimit: 7
  progressDeadlineSeconds: 600
  template:
    metadata:
      labels:
        app: kafka-manager
    spec:
      containers:
      - name: kafka-manager
        image: harbor.od.com/infra/kafka-manager:v2.0.0.2
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 9000
          protocol: TCP
        env:
        - name: ZK_HOSTS
          value: zk1.od.com:2181
        - name: APPLICATION_SECRET
          value: letmein
      imagePullSecrets:
      - name: harbor
      terminationGracePeriodSeconds: 30
      securityContext:
        runAsUser: 0

kafka-manager]# vi svc.yaml

kind: Service
apiVersion: v1
metadata:
  name: kafka-manager
  namespace: infra
spec:
  ports:
  - protocol: TCP
    port: 9000
    targetPort: 9000
  selector:
    app: kafka-manager

kafka-manager]# vi ingress.yaml

kind: Ingress
apiVersion: extensions/v1beta1
metadata:
  name: kafka-manager
  namespace: infra
spec:
  rules:
  - host: km.od.com
    http:
      paths:
      - path: /
        backend:
          serviceName: kafka-manager
          servicePort: 9000

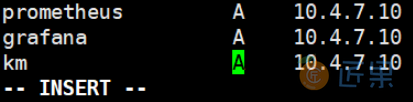

# On host 11, add the DNS record:

~]# vi /var/named/od.com.zone

Increment the serial by one, then add the record:

km A 10.4.7.10
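Forgetting to bump the serial is a common reason a zone change seems to have no effect, so the increment can be scripted. This is a sketch under one loud assumption: the serial sits on its own line annotated `; serial`, as in the od.com.zone layout used in this series. `bump_serial` is a hypothetical helper name.

```shell
# Sketch: add 1 to the SOA serial of a BIND zone file, in place.
# ASSUMPTION: the serial is the first number on the first line that
# contains "; serial". Other zone layouts need a different match.
bump_serial() {
  zone="$1"
  tmp=$(mktemp) || return 1
  awk '/;[[:space:]]*serial/ && !done {
         match($0, /[0-9]+/)
         old = substr($0, RSTART, RLENGTH)
         $0 = substr($0, 1, RSTART - 1) (old + 1) substr($0, RSTART + RLENGTH)
         done = 1
       }
       { print }' "$zone" > "$tmp" &&
    cat "$tmp" > "$zone"   # overwrite via cat so owner/permissions are kept
  rc=$?
  rm -f "$tmp"
  return "$rc"
}

# Usage:
#   bump_serial /var/named/od.com.zone
#   printf 'km\tA\t10.4.7.10\n' >> /var/named/od.com.zone
#   named-checkzone od.com /var/named/od.com.zone && systemctl restart named
```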

~]# systemctl restart named

~]# dig -t A km.od.com @10.4.7.11 +short

# output: 10.4.7.10
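For scripted setups, the dig check can fail loudly instead of relying on someone to read the output. This is a minimal sketch that assumes `dig` (from bind-utils) is installed; `check_a` is a hypothetical helper name. If a name has several A records, dig prints several lines and the check reports a mismatch. That behavior is deliberate here.

```shell
# Sketch: succeed only if NAME resolves to exactly EXPECTED via SERVER.
check_a() {
  name="$1"; server="$2"; expected="$3"
  got=$(dig -t A "$name" @"$server" +short)
  if [ "$got" = "$expected" ]; then
    echo "ok: $name -> $got"
  else
    echo "mismatch: $name -> '${got}' (expected $expected)" >&2
    return 1
  fi
}

# Usage:
#   check_a km.od.com 10.4.7.11 10.4.7.10
```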

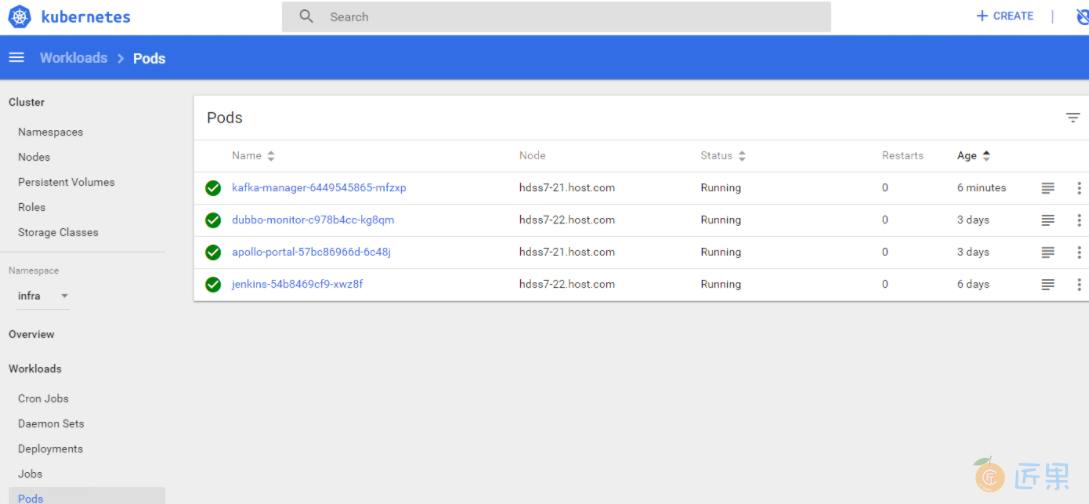

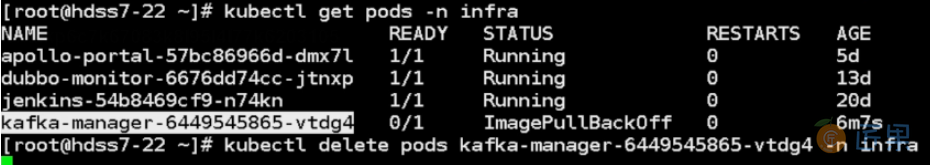

# On host 22, apply the resources:

~]# kubectl apply -f http://k8s-yaml.od.com/kafka-manager/dp.yaml

~]# kubectl apply -f http://k8s-yaml.od.com/kafka-manager/svc.yaml

~]# kubectl apply -f http://k8s-yaml.od.com/kafka-manager/ingress.yaml

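Because the image is large, the pod may fail to start at first and the image pull may need several retries. On hosts with ample resources this should not be a problem.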
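The three apply commands can be combined with a wait on the rollout, so you see when the slow image pull has finished instead of polling by hand. This is a sketch that assumes kubectl on this host is already configured for the cluster. `deploy_kafka_manager` is a hypothetical helper name, and the 600 s timeout simply mirrors `progressDeadlineSeconds` in dp.yaml.

```shell
# Sketch: apply the kafka-manager manifests in order, then block until the
# Deployment has rolled out or the timeout expires.
deploy_kafka_manager() {
  base="http://k8s-yaml.od.com/kafka-manager"
  for f in dp.yaml svc.yaml ingress.yaml; do
    kubectl apply -f "${base}/${f}" || return 1
  done
  kubectl -n infra rollout status deployment/kafka-manager --timeout=600s
}
```

If the rollout times out, `kubectl -n infra describe pod` on the kafka-manager pod usually shows whether the pull is still in progress or has failed.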

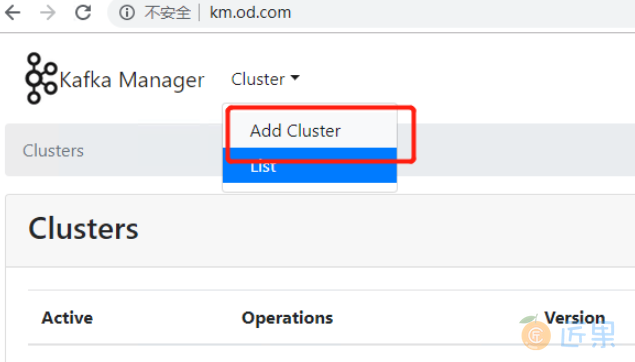

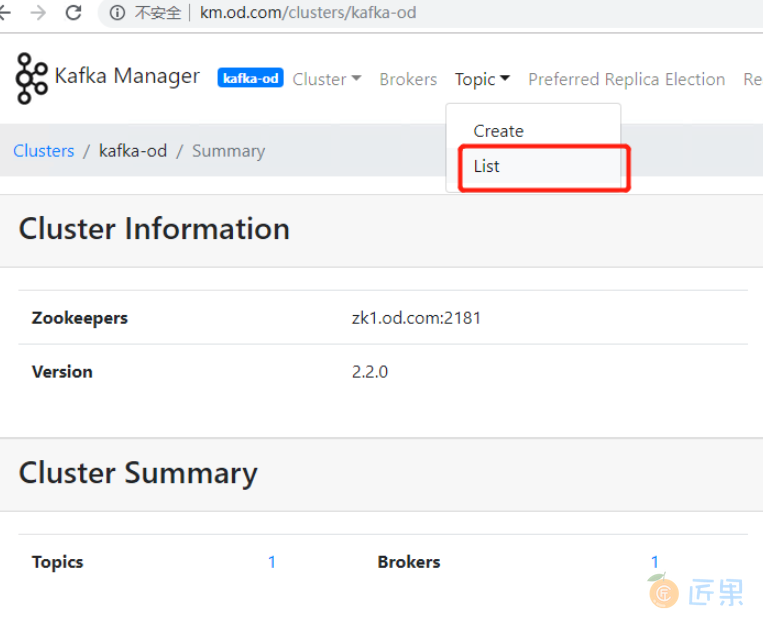

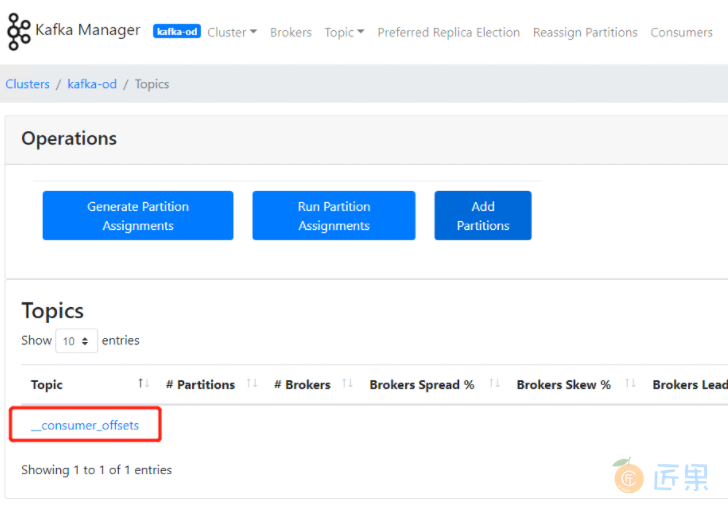

Once the pod is running, open km.od.com in a browser.

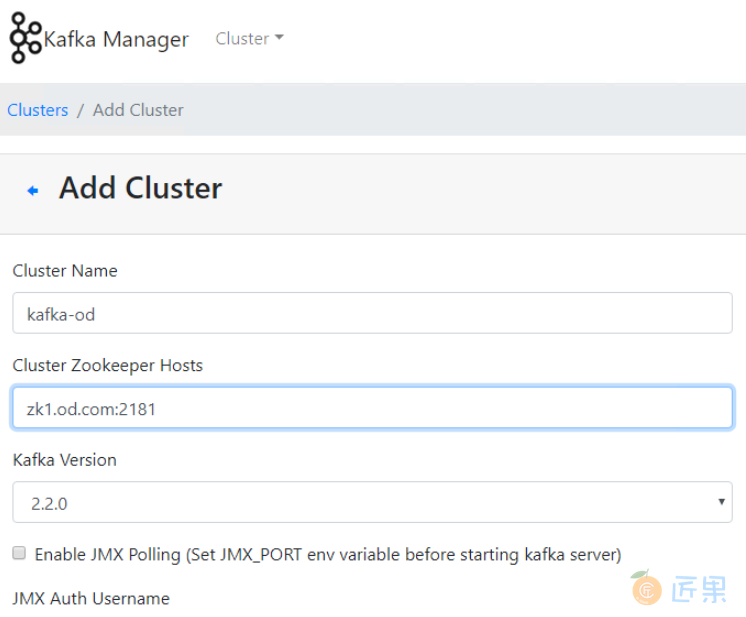

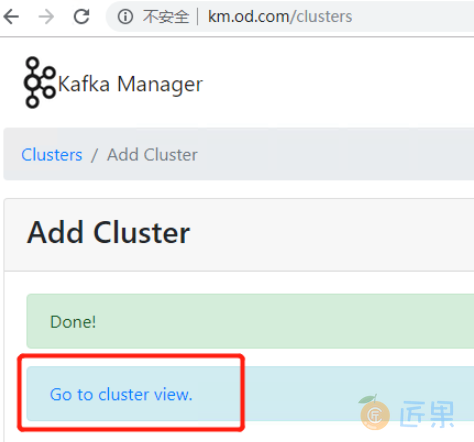

After filling in the three values above, scroll down and click Save.