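WHAT: the process by which services (applications) locate one another

WHY:

- Scenarios where service discovery is needed:

  - Services (applications) are highly dynamic

  - Services (applications) are updated and released frequently

  - Services (applications) scale automatically

- In Kubernetes, clients reach pods through a Service. The Service's name is resolved by DNS, and requests are then load-balanced across the pods behind that Service. Pod IPs change all the time. How is this solved?

  - Abstract a Service resource that selects a group of pods through a label selector

  - Abstract a cluster network that gives each Service a fixed "cluster IP", so its access point never changes

- How do we manage the mapping between a Service's "name" and its "cluster IP"?

  - Earlier we built the traditional DNS model: hdss7-21.host.com -> 10.4.7.21

  - In K8S we can build the same kind of model: nginx-ds -> 192.168.0.1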
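The name -> IP model above works because every Service gets a predictable DNS name. Here is a minimal POSIX sh sketch of how that name is composed. It assumes the default cluster domain cluster.local, which is the domain this setup later configures in CoreDNS. The helper name svc_fqdn is hypothetical.

```sh
# Hypothetical helper: compose the in-cluster DNS name of a Service.
# Kubernetes publishes Services as <service>.<namespace>.svc.<cluster-domain>.
svc_fqdn() {
  local svc="$1" ns="${2:-default}" domain="${3:-cluster.local}"
  printf '%s.%s.svc.%s\n' "$svc" "$ns" "$domain"
}

svc_fqdn nginx-dp kube-public   # prints nginx-dp.kube-public.svc.cluster.local
```

CoreDNS keeps the record behind this name pointed at the Service's cluster IP. Clients therefore never need to know which pods currently back it.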

# From here on we deliver services as containers rather than as binaries; this is the approach we will use most from now on

# On host 200

certs]# cd /etc/nginx/conf.d/

conf.d]# vi /etc/nginx/conf.d/k8s-yaml.od.com.conf

server {
    listen       80;
    server_name  k8s-yaml.od.com;
    location / {
        autoindex on;
        default_type text/plain;
        root /data/k8s-yaml;
    }
}

conf.d]# mkdir /data/k8s-yaml

conf.d]# nginx -t

conf.d]# nginx -s reload
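With root /data/k8s-yaml, nginx serves a request by appending the request's URI path to the root directory. The autoindex on directive lists a directory's contents when no index file exists. This is how files placed under /data/k8s-yaml become reachable at URLs such as http://k8s-yaml.od.com/coredns/rbac.yaml.

Here is a minimal sketch of that mapping. The helper name url_to_path is hypothetical, and it ignores query strings and percent-decoding.

```sh
# Hypothetical helper: mirror how nginx's "root" directive maps a URL to a file.
# The file path is <root> + <URI path>; query strings are not handled here.
url_to_path() {
  local url="$1" root="${2:-/data/k8s-yaml}"
  local rest="${url#*://}"     # drop the scheme, e.g. "http://"
  local uri="/${rest#*/}"      # drop the host, keep the path
  case $rest in
    */*) ;;
    *) uri=/ ;;                # bare host: serve the root directory
  esac
  printf '%s%s\n' "${root%/}" "$uri"
}

url_to_path http://k8s-yaml.od.com/coredns/rbac.yaml   # prints /data/k8s-yaml/coredns/rbac.yaml
```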

# On host 11, add a DNS record for the new domain:

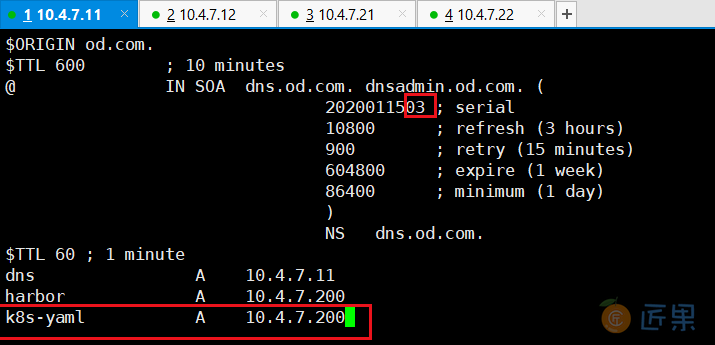

~]# vi /var/named/od.com.zone

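Increment the serial in the SOA record by one (BIND uses the serial number to tell that the zone has changed)

# Append the record below at the end of the file. Every later record also goes at the end, so I won't repeat this note.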

k8s-yaml A 10.4.7.200
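Forgetting to bump the serial is a common mistake. Here is a minimal sketch that increments it. It assumes the serial sits at the start of its own line, followed by a "; serial" comment. This is a common BIND zone-file layout, but this document only shows the zone file as a screenshot. The helper name bump_serial is hypothetical.

```sh
# Hypothetical helper: read a zone file on stdin and print it with the SOA
# serial incremented by one. Assumes a line like "    2019111001 ; serial".
# Serials with leading zeros would be read as octal, so they are not supported.
bump_serial() {
  local line lead rest num
  while IFS= read -r line || [ -n "$line" ]; do
    lead=${line%%[0-9]*}      # everything before the first digit
    rest=${line#"$lead"}      # the first digit onwards
    num=${rest%%[!0-9]*}      # the leading run of digits
    case $lead in
      *[![:blank:]]*) num= ;; # only whitespace may precede the serial
    esac
    case ${rest#"$num"} in
      *";"*serial*) ;;
      *) num= ;;              # the number must be followed by "; serial"
    esac
    if [ -n "$num" ]; then
      printf '%s%s%s\n' "$lead" "$((num + 1))" "${rest#"$num"}"
    else
      printf '%s\n' "$line"
    fi
  done
}
```

Usage sketch: run bump_serial < /var/named/od.com.zone > /tmp/od.com.zone, then check the result with named-checkzone od.com /tmp/od.com.zone. Copy it back over the original before restarting named.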

~]# systemctl restart named

~]# dig -t A k8s-yaml.od.com @10.4.7.11 +short

# out:10.4.7.200

dig -t A: query A records; @ gives the IP of the DNS server to ask; +short prints only the answer (the IP)
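Because +short prints only the answer, the result is easy to check in a script. Here is a minimal sketch that accepts the output only if it is exactly one dotted-quad IPv4 address. The helper name is_ipv4 is hypothetical. The function body runs in a subshell, so changing IFS does not leak into the caller.

```sh
# Hypothetical helper: succeed only if $1 is a single dotted-quad IPv4 address,
# e.g. the one line printed by "dig -t A <name> @<server> +short".
is_ipv4() (
  ip=$1
  case $ip in
    ''|*[!0-9.]*|.*|*.|*..*) exit 1 ;;   # empty, stray characters, bad dots
  esac
  IFS=.
  set -- $ip                             # split into octets on "."
  [ "$#" -eq 4 ] || exit 1
  for octet do
    [ "${#octet}" -le 3 ] && [ "$octet" -le 255 ] || exit 1
  done
)
```

Usage sketch: ip=$(dig -t A k8s-yaml.od.com @10.4.7.11 +short); is_ipv4 "$ip" && echo "resolved to $ip"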

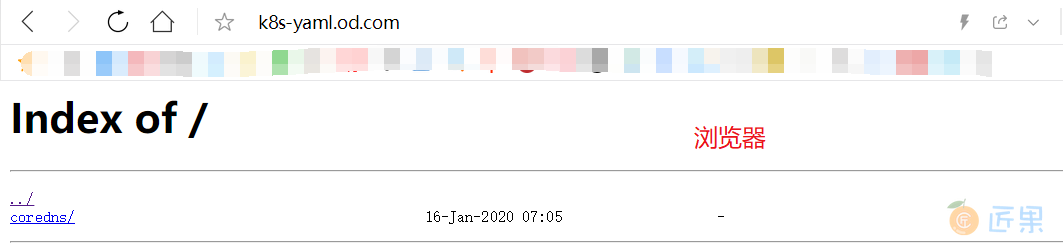

# On host 200

conf.d]# cd /data/k8s-yaml/

k8s-yaml]# mkdir coredns

# On host 200, pull the CoreDNS image:

cd /data/k8s-yaml/

k8s-yaml]# docker pull coredns/coredns:1.6.1

k8s-yaml]# docker images|grep coredns

k8s-yaml]# docker tag c0f6e815079e harbor.od.com/public/coredns:v1.6.1

k8s-yaml]# docker push !$

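Note that every image I use gets pushed to my local private registry. As explained earlier, there are two reasons. First, pulls are fast and avoid network problems. Second, the version stays exactly the same instead of being changed by someone else without notice.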

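docker push !$: !$ is bash history expansion for the last argument of the previous command, so this pushes harbor.od.com/public/coredns:v1.6.1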
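The retagging step follows a naming convention: the upstream image coredns/coredns:1.6.1 becomes harbor.od.com/public/coredns:v1.6.1. Here is a minimal sketch that applies the same convention to other images. The helper name private_ref is hypothetical. Its rule is generalized from this single example and is an assumption: keep the last path component, and prefix the tag with "v" unless it is latest or already starts with v. Digest references (@sha256:...) are not handled.

```sh
# Hypothetical helper: map an upstream image reference to the private Harbor
# name used in this setup. Assumed rule: keep the last path component of the
# repository and prefix the tag with "v" unless it already has one.
private_ref() {
  local src="$1" registry="${2:-harbor.od.com/public}"
  local repo="$src" tag=latest          # Docker's default tag
  case ${src##*/} in
    *:*) repo=${src%:*}; tag=${src##*:} ;;
  esac
  case $tag in
    v*|latest) ;;
    *) tag="v$tag" ;;
  esac
  printf '%s/%s:%s\n' "$registry" "${repo##*/}" "$tag"
}

private_ref coredns/coredns:1.6.1   # prints harbor.od.com/public/coredns:v1.6.1
```

Usage sketch: docker tag coredns/coredns:1.6.1 "$(private_ref coredns/coredns:1.6.1)", then docker push the same name.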

# On host 200, prepare the resource manifests:

cd /data/k8s-yaml/coredns

coredns]# vi rbac.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: coredns
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile

---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
    addonmanager.kubernetes.io/mode: Reconcile
  name: system:coredns
rules:
- apiGroups:
  - ""
  resources:
  - endpoints
  - services
  - pods
  - namespaces
  verbs:
  - list
  - watch

---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
    addonmanager.kubernetes.io/mode: EnsureExists
  name: system:coredns
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:coredns
subjects:
- kind: ServiceAccount
  name: coredns
  namespace: kube-system

coredns]# vi cm.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: coredns
  namespace: kube-system
data:
  Corefile: |
    .:53 {
        errors
        log
        health
        ready
        kubernetes cluster.local 192.168.0.0/16
        forward . 10.4.7.11
        cache 30
        loop
        reload
        loadbalance
    }
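In this Corefile, kubernetes cluster.local 192.168.0.0/16 makes CoreDNS answer for names under cluster.local. Because a CIDR is listed, it also answers reverse (PTR) lookups for addresses in 192.168.0.0/16. The line forward . 10.4.7.11 sends every other query to the bind server on host 11. A reverse lookup asks for the address's in-addr.arpa name. Here is a minimal sketch that builds that name; the helper name ptr_name is hypothetical.

```sh
# Hypothetical helper: build the PTR query name for an IPv4 address.
# Reverse lookups reverse the octets and append ".in-addr.arpa".
ptr_name() (
  IFS=.
  set -- $1                  # split the address into its four octets
  printf '%s.%s.%s.%s.in-addr.arpa\n' "$4" "$3" "$2" "$1"
)

ptr_name 192.168.0.2   # prints 2.0.168.192.in-addr.arpa
```

Usage sketch: dig -t PTR "$(ptr_name 192.168.0.2)" @192.168.0.2 +short. The command dig -x 192.168.0.2 performs the same reversal itself.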

coredns]# vi dp.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: coredns
    kubernetes.io/name: "CoreDNS"
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: coredns
  template:
    metadata:
      labels:
        k8s-app: coredns
    spec:
      priorityClassName: system-cluster-critical
      serviceAccountName: coredns
      containers:
      - name: coredns
        image: harbor.od.com/public/coredns:v1.6.1
        args:
        - -conf
        - /etc/coredns/Corefile
        volumeMounts:
        - name: config-volume
          mountPath: /etc/coredns
        ports:
        - containerPort: 53
          name: dns
          protocol: UDP
        - containerPort: 53
          name: dns-tcp
          protocol: TCP
        - containerPort: 9153
          name: metrics
          protocol: TCP
        livenessProbe:
          httpGet:
            path: /health
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
      dnsPolicy: Default
      volumes:
      - name: config-volume
        configMap:
          name: coredns
          items:
          - key: Corefile
            path: Corefile

coredns]# vi svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: coredns
    kubernetes.io/cluster-service: "true"
    kubernetes.io/name: "CoreDNS"
spec:
  selector:
    k8s-app: coredns
  clusterIP: 192.168.0.2
  ports:
  - name: dns
    port: 53
    protocol: UDP
  - name: dns-tcp
    port: 53
  - name: metrics
    port: 9153
    protocol: TCP

# On host 21, apply the resource manifests (declarative):

~]# kubectl apply -f http://k8s-yaml.od.com/coredns/rbac.yaml

~]# kubectl apply -f http://k8s-yaml.od.com/coredns/cm.yaml

~]# kubectl apply -f http://k8s-yaml.od.com/coredns/dp.yaml

~]# kubectl apply -f http://k8s-yaml.od.com/coredns/svc.yaml
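The four manifests are applied in dependency order. The ServiceAccount/RBAC objects and the ConfigMap are created before the Deployment whose pods use them, and the Service comes last. Applying them out of order would not break anything permanently, but the pods would typically fail to be created or started until the missing objects existed. Here is a minimal sketch that prints the URLs in that order; the helper name coredns_manifests is hypothetical.

```sh
# Hypothetical helper: print the CoreDNS manifest URLs in apply order.
# Dependencies first: RBAC (ServiceAccount), ConfigMap, then Deployment and Service.
coredns_manifests() {
  local base="${1:-http://k8s-yaml.od.com/coredns}" f
  for f in rbac cm dp svc; do
    printf '%s/%s.yaml\n' "${base%/}" "$f"
  done
}
```

Usage sketch: coredns_manifests | xargs -n 1 kubectl apply -f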

~]# kubectl get all -n kube-system

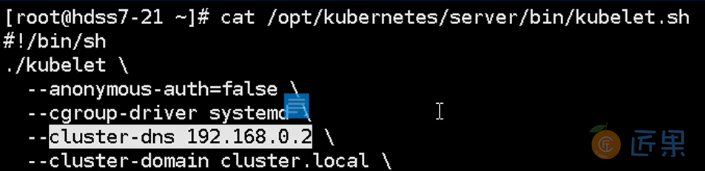

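Why is the CLUSTER-IP 192.168.0.2? Because we hard-coded this address earlier as the cluster's single DNS entry point. It must match the DNS server address configured on every kubelet (--cluster-dns), which is the nameserver written into each pod's /etc/resolv.conf.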
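A fixed clusterIP set in svc.yaml must also fall inside the apiserver's Service IP range, or the Service is rejected. That range is not shown in this section. The sketch below assumes 192.168.0.0/16, because it is the range given to the kubernetes plugin in the Corefile, and both Service IPs seen here (192.168.0.2 and 192.168.81.37) lie inside it. The helper names ip_to_int and ip_in_cidr are hypothetical.

```sh
# Hypothetical helpers: test whether IPv4 address $1 lies inside CIDR $2.
# Relies on 64-bit shell arithmetic (bash, or dash on 64-bit Linux).
ip_to_int() (
  IFS=.
  set -- $1                  # four octets
  echo "$(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))"
)

ip_in_cidr() {
  local net="${2%/*}" bits="${2#*/}"
  # Netmask with the top <bits> bits set; /0 yields 0 because the shift is 32.
  local mask="$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))"
  [ "$(( $(ip_to_int "$1") & mask ))" -eq "$(( $(ip_to_int "$net") & mask ))" ]
}
```

Usage sketch: ip_in_cidr 192.168.0.2 192.168.0.0/16 && echo "clusterIP is inside the assumed Service range"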

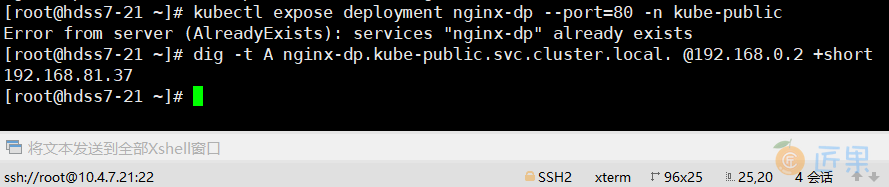

# On host 21, test it (my nginx-dp already exists, but that doesn't matter):

~]# kubectl create deployment nginx-dp --image=harbor.od.com/public/nginx:v1.7.9 -n kube-public

~]# kubectl expose deployment nginx-dp --port=80 -n kube-public

~]# kubectl get svc -n kube-public

~]# dig -t A nginx-dp.kube-public.svc.cluster.local. @192.168.0.2 +short

# out:192.168.81.37
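dig does not apply a resolver search list unless asked to (+search). The test therefore spells out the fully qualified name, and the trailing dot marks it as absolute. Pods with dnsPolicy ClusterFirst (the Kubernetes default) get a search list in /etc/resolv.conf. This is why code inside a pod can simply use nginx-dp (same namespace) or nginx-dp.kube-public.

Here is a minimal sketch of that list and of the candidate names tried for a short name. The helper names pod_search_list and expand_name are hypothetical. The node's own search domains and the ndots rule are left out.

```sh
# Hypothetical helper: the search list the kubelet writes into a pod's
# /etc/resolv.conf for dnsPolicy ClusterFirst (host search domains omitted).
pod_search_list() {
  local ns="$1" domain="${2:-cluster.local}"
  printf '%s.svc.%s svc.%s %s\n' "$ns" "$domain" "$domain" "$domain"
}

# Candidate names the resolver tries, in order, for a short name.
expand_name() {
  local name="$1" ns="$2" d
  for d in $(pod_search_list "$ns"); do
    printf '%s.%s\n' "$name" "$d"
  done
}

pod_search_list kube-public
# prints kube-public.svc.cluster.local svc.cluster.local cluster.local
```

For nginx-dp in kube-public, the first candidate is nginx-dp.kube-public.svc.cluster.local, the same name queried by the dig test.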
