WHAT: The core service component. It pulls metrics from exporters, stores the monitoring data, and provides a flexible query language (PromQL).

# On host 200, prepare the image and resource manifests:

~]# docker pull prom/prometheus:v2.14.0

~]# docker images|grep prometheus

~]# docker tag 7317640d555e harbor.od.com/infra/prometheus:v2.14.0

~]# docker push harbor.od.com/infra/prometheus:v2.14.0
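The tag step above uses an image ID, which is specific to the machine that pulled the image. As a minimal sketch, the same pull/tag/push sequence can be expressed by name instead. The helper `target_ref` is a hypothetical name introduced here for illustration, and the block only prints the commands (a dry run) rather than running them:

```shell
# Build the private-registry reference from an upstream image reference.
# target_ref is a hypothetical helper, not part of docker.
target_ref() {
  src=$1        # upstream reference, e.g. prom/prometheus:v2.14.0
  registry=$2   # registry host plus project, e.g. harbor.od.com/infra
  # ${src##*/} strips everything up to the last "/", leaving name:tag
  printf '%s/%s\n' "$registry" "${src##*/}"
}

src=prom/prometheus:v2.14.0
dst=$(target_ref "$src" harbor.od.com/infra)

# Dry run: print the commands instead of executing them.
echo "docker pull $src"
echo "docker tag $src $dst"
echo "docker push $dst"
```

Removing the `echo` wrappers would run the commands for real; `docker tag` accepts a name:tag source as well as an image ID.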

~]# mkdir /data/k8s-yaml/prometheus

~]# cd /data/k8s-yaml/prometheus

prometheus]# vi rbac.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/cluster-service: "true"
  name: prometheus
  namespace: infra
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/cluster-service: "true"
  name: prometheus
rules:
- apiGroups:
  - ""
  resources:
  - nodes
  - nodes/metrics
  - services
  - endpoints
  - pods
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - configmaps
  verbs:
  - get
- nonResourceURLs:
  - /metrics
  verbs:
  - get
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/cluster-service: "true"
  name: prometheus
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: prometheus
subjects:
- kind: ServiceAccount
  name: prometheus
  namespace: infra

prometheus]# vi dp.yaml

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  annotations:
    deployment.kubernetes.io/revision: "5"
  labels:
    name: prometheus
  name: prometheus
  namespace: infra
spec:
  progressDeadlineSeconds: 600
  replicas: 1
  revisionHistoryLimit: 7
  selector:
    matchLabels:
      app: prometheus
  strategy:
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 1
    type: RollingUpdate
  template:
    metadata:
      labels:
        app: prometheus
    spec:
      containers:
      - name: prometheus
        image: harbor.od.com/infra/prometheus:v2.14.0
        imagePullPolicy: IfNotPresent
        command:
        - /bin/prometheus
        args:
        - --config.file=/data/etc/prometheus.yml
        - --storage.tsdb.path=/data/prom-db
        - --storage.tsdb.min-block-duration=10m
        - --storage.tsdb.retention=72h
        ports:
        - containerPort: 9090
          protocol: TCP
        volumeMounts:
        - mountPath: /data
          name: data
        resources:
          requests:
            cpu: "1000m"
            memory: "1.5Gi"
          limits:
            cpu: "2000m"
            memory: "3Gi"
      imagePullSecrets:
      - name: harbor
      securityContext:
        runAsUser: 0
      serviceAccountName: prometheus
      volumes:
      - name: data
        nfs:
          server: hdss7-200
          path: /data/nfs-volume/prometheus

prometheus]# vi svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: prometheus
  namespace: infra
spec:
  ports:
  - port: 9090
    protocol: TCP
    targetPort: 9090
  selector:
    app: prometheus

prometheus]# vi ingress.yaml

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  annotations:
    kubernetes.io/ingress.class: traefik
  name: prometheus
  namespace: infra
spec:
  rules:
  - host: prometheus.od.com
    http:
      paths:
      - path: /
        backend:
          serviceName: prometheus
          servicePort: 9090

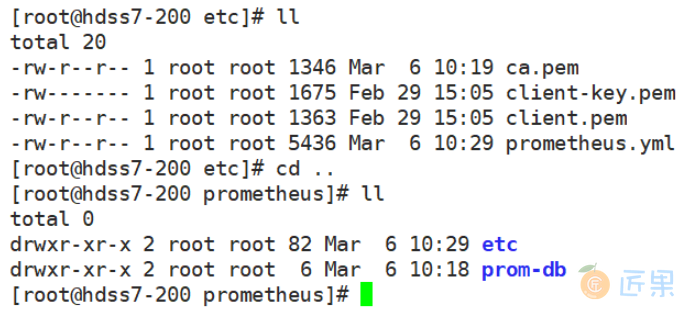

# Prepare the Prometheus configuration file:

prometheus]# mkdir /data/nfs-volume/prometheus

prometheus]# cd /data/nfs-volume/prometheus

prometheus]# mkdir {etc,prom-db}

prometheus]# cd etc/

etc]# cp /opt/certs/ca.pem .

etc]# cp -a /opt/certs/client.pem .

etc]# cp -a /opt/certs/client-key.pem .

etc]# vi prometheus.yml

global:
  scrape_interval: 15s
  evaluation_interval: 15s
scrape_configs:
- job_name: 'etcd'
  tls_config:
    ca_file: /data/etc/ca.pem
    cert_file: /data/etc/client.pem
    key_file: /data/etc/client-key.pem
  scheme: https
  static_configs:
  - targets:
    - '10.4.7.12:2379'
    - '10.4.7.21:2379'
    - '10.4.7.22:2379'
- job_name: 'kubernetes-apiservers'
  kubernetes_sd_configs:
  - role: endpoints
  scheme: https
  tls_config:
    ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
  bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
  relabel_configs:
  - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
    action: keep
    regex: default;kubernetes;https
- job_name: 'kubernetes-pods'
  kubernetes_sd_configs:
  - role: pod
  relabel_configs:
  - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
    action: keep
    regex: true
  - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]
    action: replace
    target_label: __metrics_path__
    regex: (.+)
  - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
    action: replace
    regex: ([^:]+)(?::\d+)?;(\d+)
    replacement: $1:$2
    target_label: __address__
  - action: labelmap
    regex: __meta_kubernetes_pod_label_(.+)
  - source_labels: [__meta_kubernetes_namespace]
    action: replace
    target_label: kubernetes_namespace
  - source_labels: [__meta_kubernetes_pod_name]
    action: replace
    target_label: kubernetes_pod_name
- job_name: 'kubernetes-kubelet'
  kubernetes_sd_configs:
  - role: node
  relabel_configs:
  - action: labelmap
    regex: __meta_kubernetes_node_label_(.+)
  - source_labels: [__meta_kubernetes_node_name]
    regex: (.+)
    target_label: __address__
    replacement: ${1}:10255
- job_name: 'kubernetes-cadvisor'
  kubernetes_sd_configs:
  - role: node
  relabel_configs:
  - action: labelmap
    regex: __meta_kubernetes_node_label_(.+)
  - source_labels: [__meta_kubernetes_node_name]
    regex: (.+)
    target_label: __address__
    replacement: ${1}:4194
- job_name: 'kubernetes-kube-state'
  kubernetes_sd_configs:
  - role: pod
  relabel_configs:
  - action: labelmap
    regex: __meta_kubernetes_pod_label_(.+)
  - source_labels: [__meta_kubernetes_namespace]
    action: replace
    target_label: kubernetes_namespace
  - source_labels: [__meta_kubernetes_pod_name]
    action: replace
    target_label: kubernetes_pod_name
  - source_labels: [__meta_kubernetes_pod_label_grafanak8sapp]
    regex: .*true.*
    action: keep
  - source_labels: ['__meta_kubernetes_pod_label_daemon', '__meta_kubernetes_pod_node_name']
    regex: 'node-exporter;(.*)'
    action: replace
    target_label: nodename
- job_name: 'blackbox_http_pod_probe'
  metrics_path: /probe
  kubernetes_sd_configs:
  - role: pod
  params:
    module: [http_2xx]
  relabel_configs:
  - source_labels: [__meta_kubernetes_pod_annotation_blackbox_scheme]
    action: keep
    regex: http
  - source_labels: [__address__, __meta_kubernetes_pod_annotation_blackbox_port, __meta_kubernetes_pod_annotation_blackbox_path]
    action: replace
    regex: ([^:]+)(?::\d+)?;(\d+);(.+)
    replacement: $1:$2$3
    target_label: __param_target
  - action: replace
    target_label: __address__
    replacement: blackbox-exporter.kube-system:9115
  - source_labels: [__param_target]
    target_label: instance
  - action: labelmap
    regex: __meta_kubernetes_pod_label_(.+)
  - source_labels: [__meta_kubernetes_namespace]
    action: replace
    target_label: kubernetes_namespace
  - source_labels: [__meta_kubernetes_pod_name]
    action: replace
    target_label: kubernetes_pod_name
- job_name: 'blackbox_tcp_pod_probe'
  metrics_path: /probe
  kubernetes_sd_configs:
  - role: pod
  params:
    module: [tcp_connect]
  relabel_configs:
  - source_labels: [__meta_kubernetes_pod_annotation_blackbox_scheme]
    action: keep
    regex: tcp
  - source_labels: [__address__, __meta_kubernetes_pod_annotation_blackbox_port]
    action: replace
    regex: ([^:]+)(?::\d+)?;(\d+)
    replacement: $1:$2
    target_label: __param_target
  - action: replace
    target_label: __address__
    replacement: blackbox-exporter.kube-system:9115
  - source_labels: [__param_target]
    target_label: instance
  - action: labelmap
    regex: __meta_kubernetes_pod_label_(.+)
  - source_labels: [__meta_kubernetes_namespace]
    action: replace
    target_label: kubernetes_namespace
  - source_labels: [__meta_kubernetes_pod_name]
    action: replace
    target_label: kubernetes_pod_name
- job_name: 'traefik'
  kubernetes_sd_configs:
  - role: pod
  relabel_configs:
  - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scheme]
    action: keep
    regex: traefik
  - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]
    action: replace
    target_label: __metrics_path__
    regex: (.+)
  - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
    action: replace
    regex: ([^:]+)(?::\d+)?;(\d+)
    replacement: $1:$2
    target_label: __address__
  - action: labelmap
    regex: __meta_kubernetes_pod_label_(.+)
  - source_labels: [__meta_kubernetes_namespace]
    action: replace
    target_label: kubernetes_namespace
  - source_labels: [__meta_kubernetes_pod_name]
    action: replace
    target_label: kubernetes_pod_name
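Several jobs in prometheus.yml rewrite the scrape address with the pattern `([^:]+)(?::\d+)?;(\d+)`. Prometheus joins the source label values with `;`, matches the regex against the whole joined string, and on a match writes the replacement into the target label; if there is no match, a `replace` action leaves the target unchanged. The following is a rough emulation with `sed`, not Prometheus code. The function names and the sample pod address 172.7.21.5 are made up for illustration, and because `sed -E` has no non-capturing groups, the capture group numbers are shifted by one compared with the config:

```shell
# Emulates: source_labels [__address__, <port annotation>],
# regex ([^:]+)(?::\d+)?;(\d+), replacement $1:$2, target __address__
rewrite_address() {
  address=$1   # value of __address__, e.g. 172.7.21.5:8080
  port=$2      # value of the prometheus.io/port annotation (may be empty)
  joined="${address};${port}"   # Prometheus joins source labels with ";"
  # ^...$ mimics the full-string anchoring; -n with the p flag prints only on a match
  result=$(printf '%s\n' "$joined" |
    sed -nE 's/^([^:]+)(:[0-9]+)?;([0-9]+)$/\1:\3/p')
  if [ -n "$result" ]; then
    printf '%s\n' "$result"
  else
    printf '%s\n' "$address"    # no match: __address__ stays as it was
  fi
}

# Emulates the blackbox_http_pod_probe rule:
# regex ([^:]+)(?::\d+)?;(\d+);(.+), replacement $1:$2$3, target __param_target
blackbox_target() {
  joined="$1;$2;$3"             # address;port;path
  printf '%s\n' "$joined" |
    sed -nE 's/^([^:]+)(:[0-9]+)?;([0-9]+);(.+)$/\1:\3\4/p'
}

rewrite_address 172.7.21.5:8080 9100
rewrite_address 172.7.21.5:8080 ""
blackbox_target 172.7.21.5:8080 8080 /hello
```

In other words, a pod is scraped on the port named in its annotation rather than on the port discovered from the container spec, and the blackbox job turns address, port, and path into the probe target passed to the exporter.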

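cp -a: archive mode, typically used when copying directories. It preserves symbolic links and file attributes and recursively copies everything under the directory.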
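To see what the `-a` flag preserves, here is a small self-contained sketch that works in a throwaway temporary directory. The file names are placeholders chosen for illustration and do not touch /opt/certs:

```shell
# Create a scratch source directory with a restricted file and a symlink.
workdir=$(mktemp -d)
mkdir "$workdir/src"
printf 'placeholder\n' > "$workdir/src/client-key.pem"
chmod 600 "$workdir/src/client-key.pem"
ln -s client-key.pem "$workdir/src/key-link"

# -a copies recursively and keeps mode, timestamps, and symlinks as symlinks.
cp -a "$workdir/src" "$workdir/dst"

# The copy still shows -rw------- for the file and an "l" entry for the link.
ls -l "$workdir/dst"
```

A plain `cp -r` would apply the current umask to the copied file, and on many systems would follow the link and copy its target instead of the link itself.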

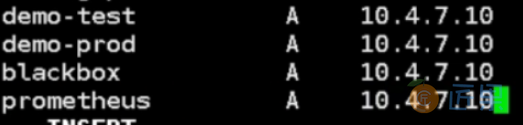

# On host 11, add the DNS record. An Ingress means there is a web page, so its host name must resolve:

~]# vi /var/named/od.com.zone

Increment the serial by one

prometheus A 10.4.7.10

~]# systemctl restart named

~]# dig -t A prometheus.od.com @10.4.7.11 +short

# out: 10.4.7.10
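The serial bump is easy to forget when editing the zone by hand. As a hedged sketch, it can be scripted with `awk`. The sample zone below is invented for illustration (only od.com and 10.4.7.11 come from these notes), and the script assumes the serial sits on a line carrying a `; serial` comment, which may not match the real /var/named/od.com.zone:

```shell
# Hypothetical sample zone file written to a temporary location.
zone=$(mktemp)
cat > "$zone" <<'EOF'
$ORIGIN od.com.
$TTL 600
@   IN SOA dns.od.com. dnsadmin.od.com. (
        2019111001 ; serial
        10800      ; refresh
        900        ; retry
        604800     ; expire
        86400      ; minimum
        )
    NS  dns.od.com.
dns        A 10.4.7.11
EOF

# Print the zone with the first number on the "; serial" line incremented by one.
bump_serial() {
  awk '
    /;[ \t]*serial/ && !bumped {
      if (match($0, /[0-9]+/)) {
        serial = substr($0, RSTART, RLENGTH)
        $0 = substr($0, 1, RSTART - 1) sprintf("%d", serial + 1) substr($0, RSTART + RLENGTH)
        bumped = 1
      }
    }
    { print }
  ' "$1"
}

new_zone=$(bump_serial "$zone")
printf '%s\n' "$new_zone" | grep serial
```

The function only prints the result; writing it back to the zone file and appending the new A record are left as manual steps so the original file is never modified by accident.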

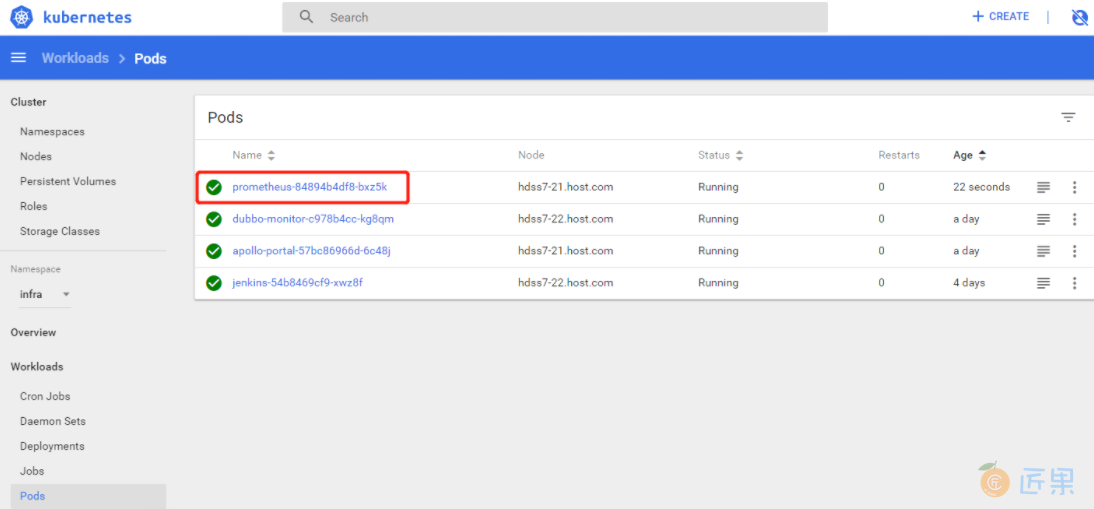

# On host 22, apply the resource manifests:

~]# kubectl apply -f http://k8s-yaml.od.com/prometheus/rbac.yaml

~]# kubectl apply -f http://k8s-yaml.od.com/prometheus/dp.yaml

~]# kubectl apply -f http://k8s-yaml.od.com/prometheus/svc.yaml

~]# kubectl apply -f http://k8s-yaml.od.com/prometheus/ingress.yaml

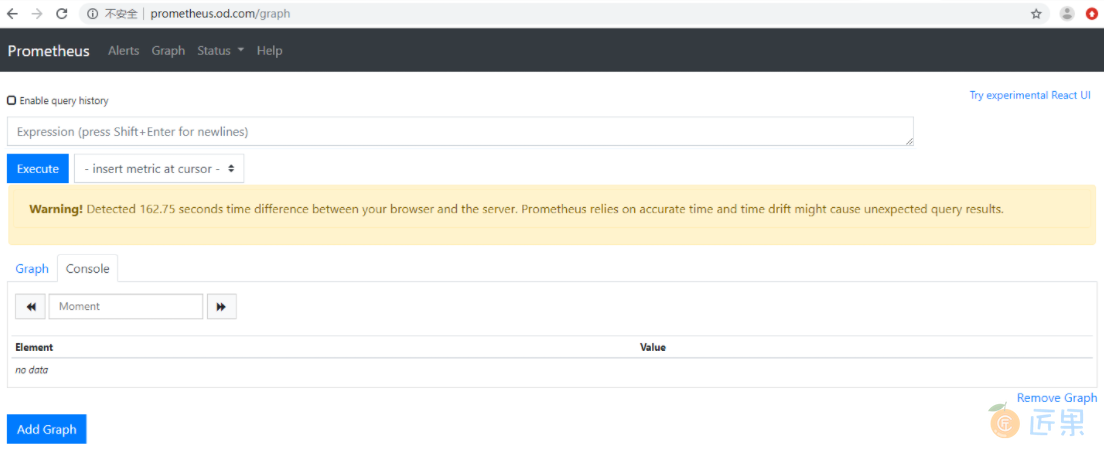

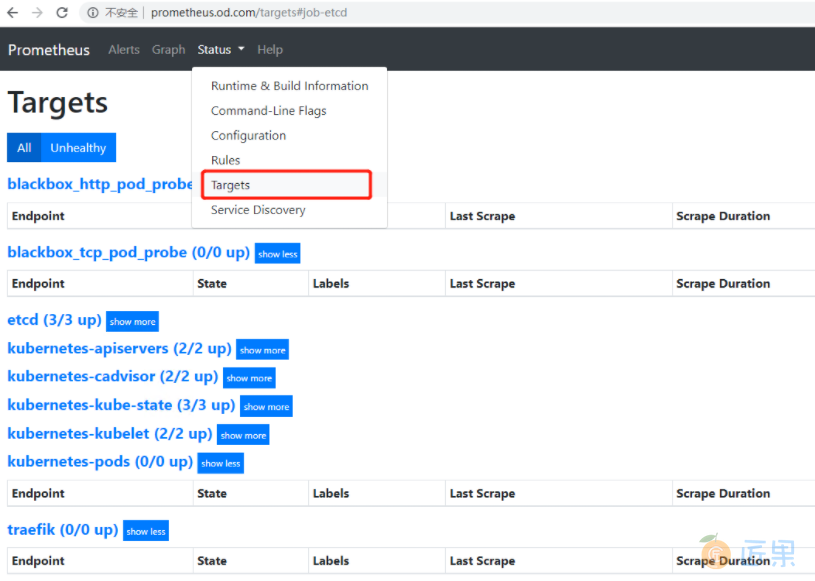

This is the UI that ships with Prometheus. Now you can see why we use Grafana in its place; if that is not clear yet, it will be once you see the Grafana pages later.